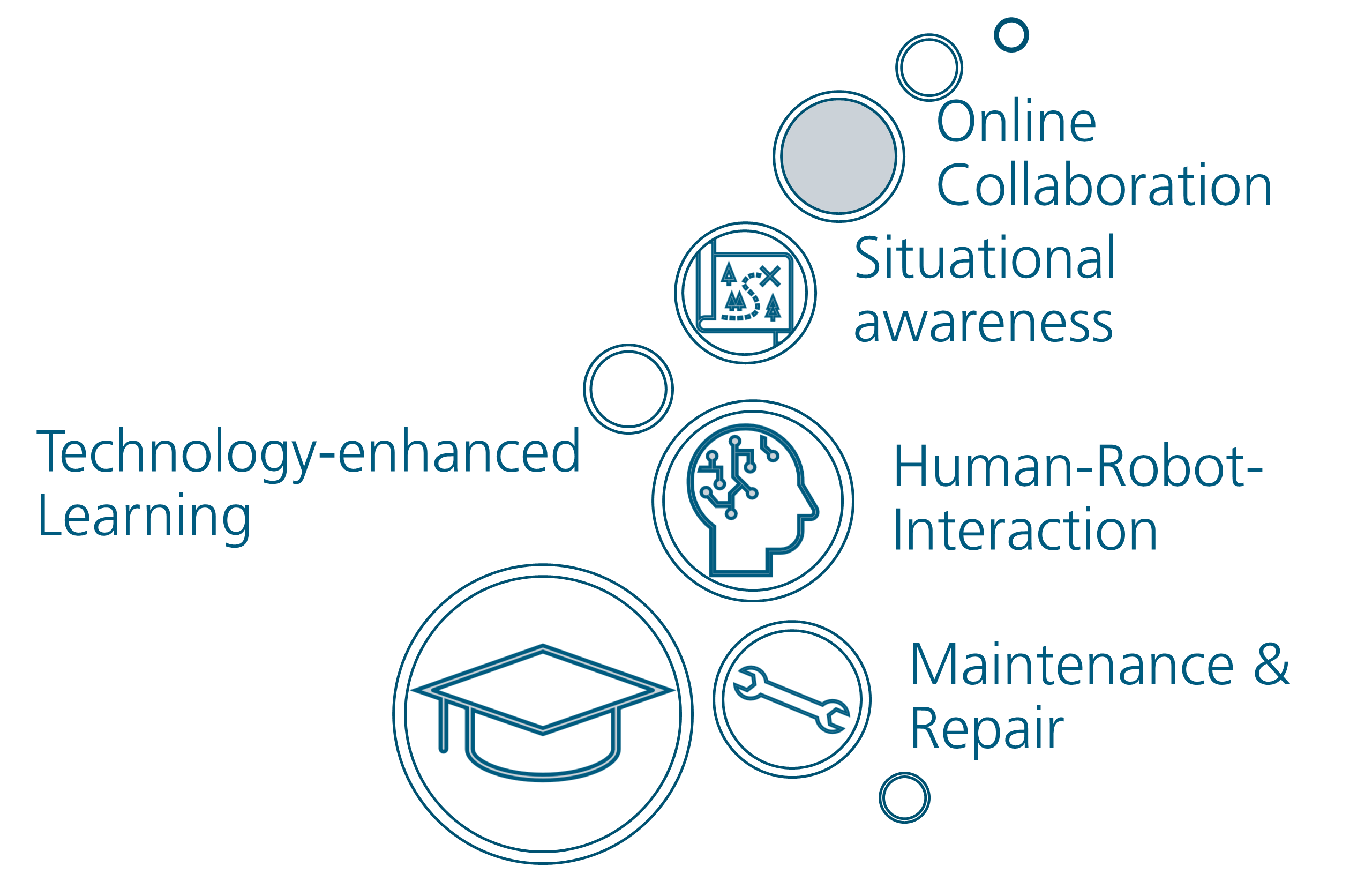

Human-centered design of virtual reality (VR) and augmented reality (AR) applications is at the core of this research area. Special emphasis is placed on exploring new design and interaction paradigms for three-dimensional interfaces. Key research topics include natural interaction techniques, the use of different modalities such as gesture, voice, or gaze control, navigation in virtual environments, technology-enhanced learning, multi-user AR/VR, and interaction with autonomous systems.

The team ensures the suitability of each design solution through systematic, context-specific evaluations with prospective end users. In this way, the applications can be assessed using both objective performance measures and subjective metrics. The insights gained are fed back into the solutions, which are iteratively improved so that they can optimally support users in performing complex tasks.

The work draws on extensive methodological expertise. In the analysis phase, the approach covers, for example, the development of personas, user journey maps, or scenarios. During development, the team relies on current industry solutions as well as its expertise in areas such as 3D engines. This enables efficient implementation of the design solutions, using modern hardware and developing dedicated solutions where needed. User behavior is then captured in controlled environments and evaluated statistically.